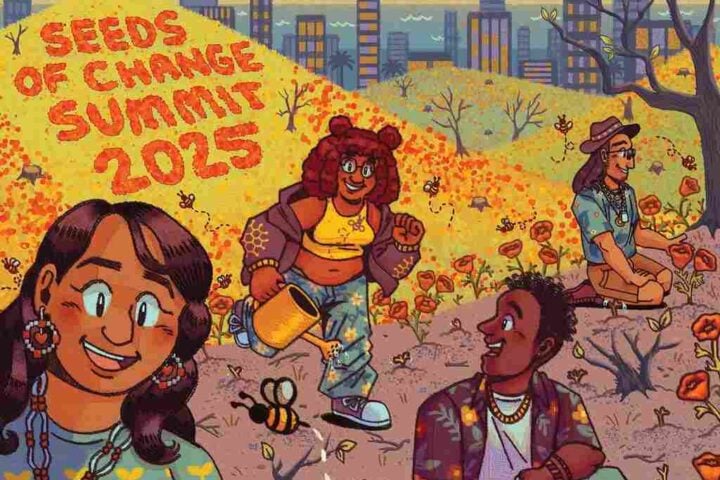

PauseAI has already held protests in cities around the world, including San Francisco, New York, Berlin, Rome, and Ottawa. PauseAI is a group of activists petitioning companies to pause development of large AI models, which they fear could pose a risk to the future of humanity.

It’s now clear that artificial intelligence is here to stay. OpenAI continues to expand ChatGPT, and competitors are developing their own large language models. But not everyone is pleased. The PauseAI movement, for instance, is calling for a halt to some AI developments. But why?

On May 13, 2024, PauseAI demonstrators gathered in front of the UK Department for Science, Innovation and Technology in London.

Members of PauseAI see the development of artificial intelligence as a threat. Their website states: “We are a community of volunteers and local communities coordinated by a non-profit that aims to mitigate the risks of AI (including the risk of human extinction).”

Their protests coincided with OpenAI’s announcement of a new ChatGPT version designed to mimic human behavior more closely. Joep Meindertsma, PauseAI’s founder, criticizes the outcomes of the AI Safety Summit but remains hopeful, citing past international technology bans such as the Montreal Protocol’s phaseout of CFCs and the treaty banning blinding laser weapons. “We’ve managed international bans before. I believe we can pause AI too,” he says.

The movement’s name suggests its goal: to pause AI development, though not all of it. PauseAI members are particularly concerned about AGI, or artificial general intelligence, and their proposed pause targets the training of AI systems more powerful than GPT-4. The reason: humanity doesn’t yet know how to control such systems, and there aren’t enough institutions and structures to guarantee their safe use. The group also wants all UN member states to sign a treaty establishing an international AI safety agency responsible for approving new deployments of AI systems and training runs of large models.

Generative AI, which can create new text, images, and other content, already poses challenges for society today. For instance, AI-generated fake news circulating on the internet has been made to look as if it came from Tagesschau, the German public broadcaster ARD’s news program. Deepfakes also present dangers. In addition, AI tools can discriminate if they are not properly trained, because the training data itself can contain biases.


PauseAI’s most extreme prediction looks further into the future: its followers fear that a superintelligent AI could mean the end of humanity. They are not alone in this concern. In a survey, AI researchers estimated, on average, a 14 percent probability that a superintelligent AI, one more intelligent than humans, could have very severe consequences. Because such systems could have internet access, they could hack other devices, according to PauseAI. This could lead to targeted manipulation of people or even the launch of nuclear weapons.

To prevent this, PauseAI argues, all work leading to AGI should be stopped. According to the movement itself, however, this would leave virtually all current AI systems unaffected. PauseAI currently doesn’t have much momentum. On the group’s Discord, some members have discussed staging sit-ins at the headquarters of AI developers. OpenAI in particular has become a focal point of AI protests. In February, PauseAI protesters gathered in front of OpenAI’s San Francisco offices after the company changed its usage policies to remove a ban on military and warfare applications of its products.