Table of Contents:

- Balance of Innovation and Ethical AI Use Across Europe

- AI Risk Classification and Restrictions

- Governance and Enforcement

- Global Impact and Current Trends

- Future Prospects and the Inevitable Transition

Balance of Innovation and Ethical AI Use Across Europe

The Council of the European Union has given final approval to the world’s first law regulating the development, production, and use of systems based on artificial intelligence (AI). Authorities in the bloc claim that the regulation “can set a global standard for AI regulation.” The European Parliament approved the legislation in March. Following the Council’s decision, it will be published in the EU’s Official Journal. The regulation’s requirements will apply two years later, “with some exceptions for specific provisions.”

“The new law aims to foster the development and uptake of safe and trustworthy AI systems across the EU’s single market by both private and public actors. At the same time, it aims to ensure respect of fundamental rights of EU citizens and stimulate investment and innovation on artificial intelligence in Europe,” says the Council.

More than 150 companies have criticized the AI Act as a threat to Europe’s technological sovereignty. Executives from some of Europe’s largest companies signed an open letter expressing their dissatisfaction with the legislation.

AI Risk Classification and Restrictions

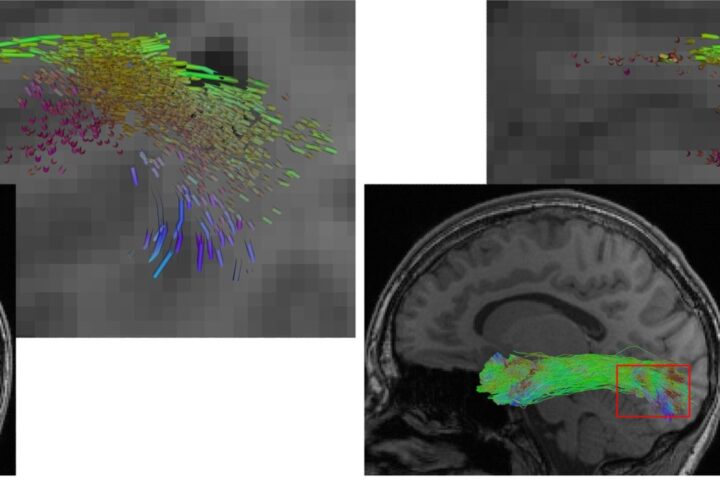

The legal framework classifies different types of artificial intelligence according to risk, on the principle that “the higher the risk to cause harm to society, the stricter the rules.” It includes three categories: limited risk, high risk, and unacceptable risk. The first category covers tools such as assistance bots or analysis solutions, which will have to meet “very light transparency obligations.” The second category covers biometric products, facial recognition, and systems used for educational and labor market purposes. Developers of these applications must register their systems in an EU database and meet stricter risk management and quality obligations to operate in the European market. The third category covers programs used for cognitive-behavioral manipulation and social scoring. These will be completely banned in Europe.
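The three tiers described above can be pictured as a simple lookup from use case to tier to obligations. The following is a minimal sketch for illustration only: the names `RiskTier`, `TIER_EXAMPLES`, `OBLIGATIONS`, and `classify` are hypothetical, and the example lists simply repeat the examples in this article rather than the Act's legal definitions.

```python
from enum import Enum


class RiskTier(Enum):
    LIMITED = "limited risk"
    HIGH = "high risk"
    UNACCEPTABLE = "unacceptable risk"


# Example use cases taken from the article's description of each tier.
# These are assumptions for the sketch, not legal definitions.
TIER_EXAMPLES = {
    RiskTier.UNACCEPTABLE: {"cognitive-behavioral manipulation", "social scoring"},
    RiskTier.HIGH: {"biometrics", "facial recognition", "education", "labor market"},
    RiskTier.LIMITED: {"assistance bot", "analysis solution"},
}

# Obligations per tier, paraphrased from the article.
OBLIGATIONS = {
    RiskTier.LIMITED: ["very light transparency obligations"],
    RiskTier.HIGH: ["register the system", "stricter management and quality obligations"],
    RiskTier.UNACCEPTABLE: ["banned in Europe"],
}


def classify(use_case: str) -> RiskTier:
    """Return the strictest tier whose example set contains the use case."""
    for tier in (RiskTier.UNACCEPTABLE, RiskTier.HIGH, RiskTier.LIMITED):
        if use_case in TIER_EXAMPLES[tier]:
            return tier
    raise ValueError(f"no example tier matches {use_case!r}")


if __name__ == "__main__":
    tier = classify("facial recognition")
    print(tier.value, "->", OBLIGATIONS[tier])
```

Checking the strictest tier first reflects the idea that a prohibited use should never be reported as merely high or limited risk. A real assessment would depend on the legal text, not on keyword matching.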

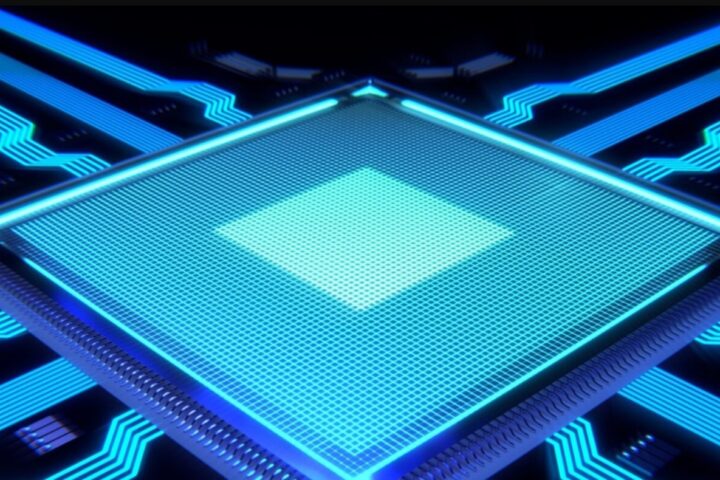

“The law also prohibits the use of AI for predictive policing based on profiling and systems that use biometric data to categorise people according to specific categories such as race, religion, or sexual orientation,” authorities say. The regulation includes a section for general-purpose AI (GPAI) models like GPT-4 or PaLM 2. Most of these will have to meet limited transparency provisions. Those deemed to have high-impact capabilities and to pose a “systemic risk” will face stricter regulation. This last classification will include GPAI models trained with cumulative compute exceeding 10^25 floating-point operations (FLOPs), a total count of operations rather than a per-second rate.
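To give a sense of scale for the 10^25 figure, the sketch below reads the threshold as a total count of floating-point operations used in training and compares it with a rough compute estimate. The estimate of about 6 operations per parameter per training token is a common rule of thumb, not something the law prescribes, and the function names and model sizes are hypothetical.

```python
# Threshold cited in the article, read as total training operations.
SYSTEMIC_RISK_THRESHOLD_FLOP = 1e25


def estimate_training_flop(n_parameters: float, n_tokens: float) -> float:
    """Rough rule of thumb (an assumption): ~6 operations per parameter per token."""
    return 6.0 * n_parameters * n_tokens


def exceeds_systemic_risk_threshold(n_parameters: float, n_tokens: float) -> bool:
    """True if the estimated training compute is above the cited threshold."""
    return estimate_training_flop(n_parameters, n_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOP


if __name__ == "__main__":
    # Hypothetical model sizes chosen only to show both outcomes.
    for params, tokens in [(70e9, 2e12), (1e12, 1e13)]:
        flop = estimate_training_flop(params, tokens)
        flag = exceeds_systemic_risk_threshold(params, tokens)
        print(f"{params:.0e} params, {tokens:.0e} tokens: {flop:.1e} FLOP, above threshold: {flag}")
```

Under this estimate, a hypothetical 70-billion-parameter model trained on 2 trillion tokens lands well below the threshold, while a 1-trillion-parameter model trained on 10 trillion tokens lands above it.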

Governance and Enforcement

Large technology companies that do not comply with the rules can be fined up to 7% of their global annual turnover. Startups and small and medium-sized enterprises will be subject to administrative fines proportional to the size of their business.

The proper application of the law will be guaranteed through a new governance structure. The structure will be led by the AI Office, a body within the European Commission responsible for enforcing common rules across the EU. Its work will be supported by the AI Board, composed of representatives from the bloc’s member states; a scientific panel of independent experts; and an advisory forum that allows companies and other interested parties to share technical advice on implementing the regulation.

Global Impact and Current Trends

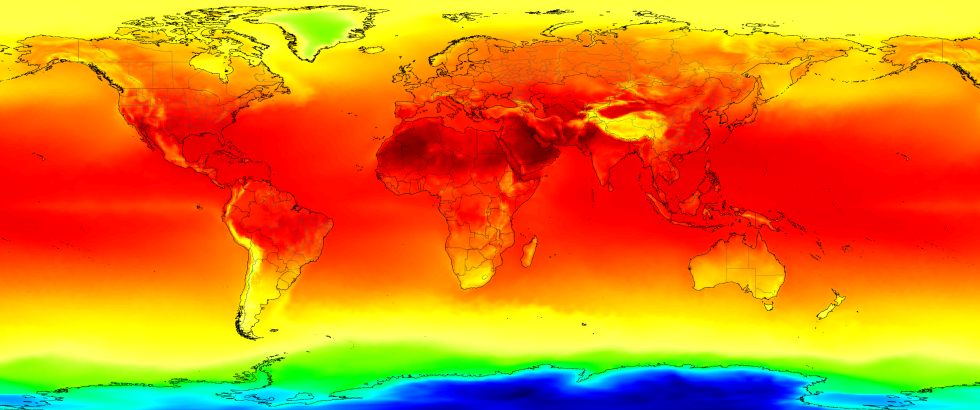

Analysts suggest that the approval of the AI Law in Europe could dictate new competition parameters in the emerging global market. The regulation opens a window for European AI startups to have more opportunities to compete with the large U.S. corporations that dominate the industry.

The European Union is not the only jurisdiction seeking to regulate the use and development of AI systems. U.S. authorities have presented some proposals in this regard, although most of the progress has been left to the sector’s large companies. Since last August, China has had a legislative framework for the use of generative AI, although it lacks broader regulations covering the development and advancement of the technology. In Mexico, 31 initiatives to regulate artificial intelligence have been presented to the Senate, but only two propose constitutional reforms.

The launch of ChatGPT by OpenAI highlighted the lack of detail in existing legislation to address the advanced capabilities of emerging generative AI and the risks related to the use of copyrighted material. This led officials to recognize the need for more specific regulations.

Mathieu Michel, Belgian Secretary of State for Digitalization, said that “this landmark law, the first of its kind in the world, addresses a global technological challenge that also creates opportunities for our societies and economies. With the AI act, Europe emphasizes the importance of trust, transparency and accountability when dealing with new technologies while at the same time ensuring this fast-changing technology can flourish and boost European innovation.”

Future Prospects and the Inevitable Transition

Although an agreement was reached on the AI Act, there is still a long way to go before it is effectively implemented and enforced. Restrictions on general-purpose systems will not start until 12 months after the AI Act takes effect. Meanwhile, generative AI systems that are already commercially available, such as ChatGPT, Google’s Gemini, and Microsoft’s Copilot, will have a “transition period” of 36 months from the AI Act’s effective date to comply with the legislation.
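The staggered deadlines are easier to follow as dates. The sketch below computes them from a given effective date. It assumes that the 12-month, 24-month (the general "two years later" application), and 36-month periods are all counted from the same date. The effective date in the example and the names `add_months`, `MILESTONE_MONTHS`, and `milestones` are hypothetical.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of shorter months."""
    total = start.month - 1 + months
    year = start.year + total // 12
    month = total % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


# Offsets in months, as described in the article.
MILESTONE_MONTHS = {
    "restrictions on general-purpose systems begin": 12,
    "general application of the law": 24,
    "transition period ends for existing generative AI systems": 36,
}


def milestones(effective_date: date) -> dict:
    """Map each milestone label to its date, counted from the effective date."""
    return {
        label: add_months(effective_date, months)
        for label, months in MILESTONE_MONTHS.items()
    }


if __name__ == "__main__":
    # Hypothetical effective date, used only to illustrate the arithmetic.
    for label, when in milestones(date(2025, 1, 1)).items():
        print(f"{when.isoformat()}  {label}")
```

Substituting the actual effective date once it is known gives the concrete deadlines. The exceptions for specific provisions mentioned earlier are not modeled here.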

High-risk AI applications are also covered by the new law. These include autonomous vehicles and medical devices, which will be assessed for the risks they pose to health, safety, and citizens’ fundamental rights. Additionally, AI applications in financial services and education will be evaluated where there is a risk of bias embedded in AI algorithms.

Finally, Michel emphasized that with this law, Europe reaffirms the need for AI technology to progress in a way that is beneficial and ethical for all. This European commitment to transparency and responsibility in AI is designed not only to regulate but also to incentivize responsible innovations in the field.